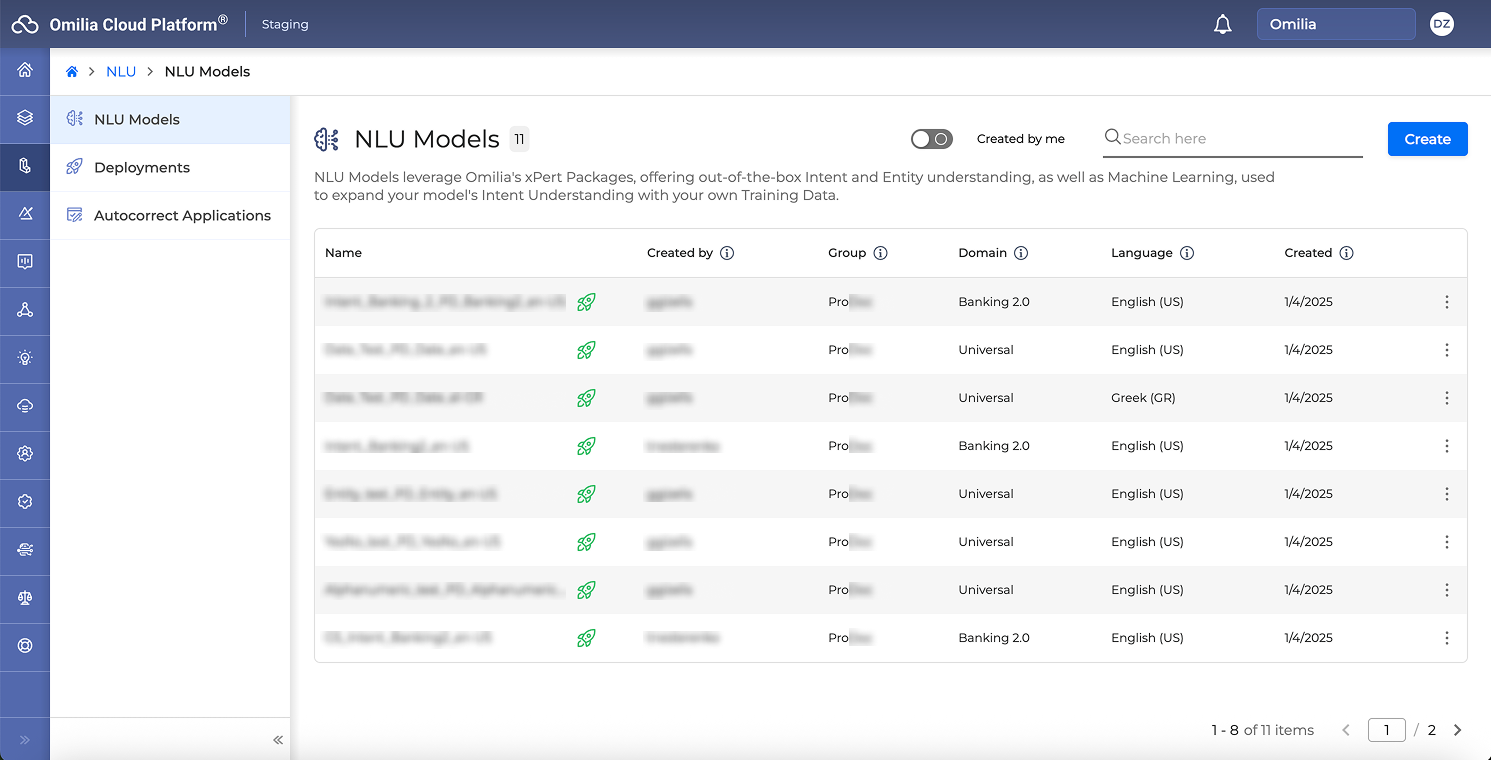

This document describes how to use the OCP Conversational Natural Language® User Interface, an easy-to-use tool for building, testing, and deploying NLU models.

Natural Language Understanding (NLU) models analyze text and extract meaningful entities, entity features, intents, and other syntactic elements. With OCP Conversational Natural Language®, you can use both Omilia’s xPacks (rule-based technology that offers out-of-the-box intent and entity understanding) and Machine Learning to expand your model’s intent understanding.