NLU Model training

Training a model might be necessary in the following cases:

- You have added your own custom data to a model, or
- You have created a custom domain model.

The training process will expand the model’s understanding of your own data using Machine Learning.

You can also read more about training best practices.

If you have added new custom data to a model that has already been trained, additional training is required.

To train a model, you need to define or upload at least two intents and at least ten utterances per intent. For better prediction accuracy, enter or upload more than ten utterances per intent.
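The minimum requirement above can be checked before training starts. The following is a minimal sketch, not part of the product: the function name `check_training_data` and the list-of-pairs layout are assumptions made for illustration, and only the two minimums (two intents, ten utterances per intent) come from the text.

```python
from collections import Counter

MIN_INTENTS = 2       # minimum number of intents stated above
MIN_UTTERANCES = 10   # minimum number of utterances per intent stated above


def check_training_data(samples):
    """Return a list of problems that would block training.

    `samples` is a list of (utterance, intent) pairs. This layout is an
    assumption for the sketch, not the platform's data format.
    """
    counts = Counter(intent for _, intent in samples)
    problems = []
    if len(counts) < MIN_INTENTS:
        problems.append(f"need at least {MIN_INTENTS} intents, found {len(counts)}")
    for intent, n in sorted(counts.items()):
        if n < MIN_UTTERANCES:
            problems.append(
                f"intent '{intent}' has {n} utterances, need at least {MIN_UTTERANCES}"
            )
    return problems


# Hypothetical data: one intent meets the minimum, the other does not.
samples = [(f"check balance {i}", "balance") for i in range(10)]
samples += [(f"pay bill {i}", "payment") for i in range(4)]
print(check_training_data(samples))
# → ["intent 'payment' has 4 utterances, need at least 10"]
```

An empty result means the data meets the stated minimum; it does not say anything about how accurate the trained model will be.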

Deployed NLU models cannot be trained or edited in any way. They are locked and available in view-only mode.

Training a model

To train a model, follow the guidelines below:

-

Navigate to NLU → NLU Models section and click on a selected model. The model drill-down page opens.

-

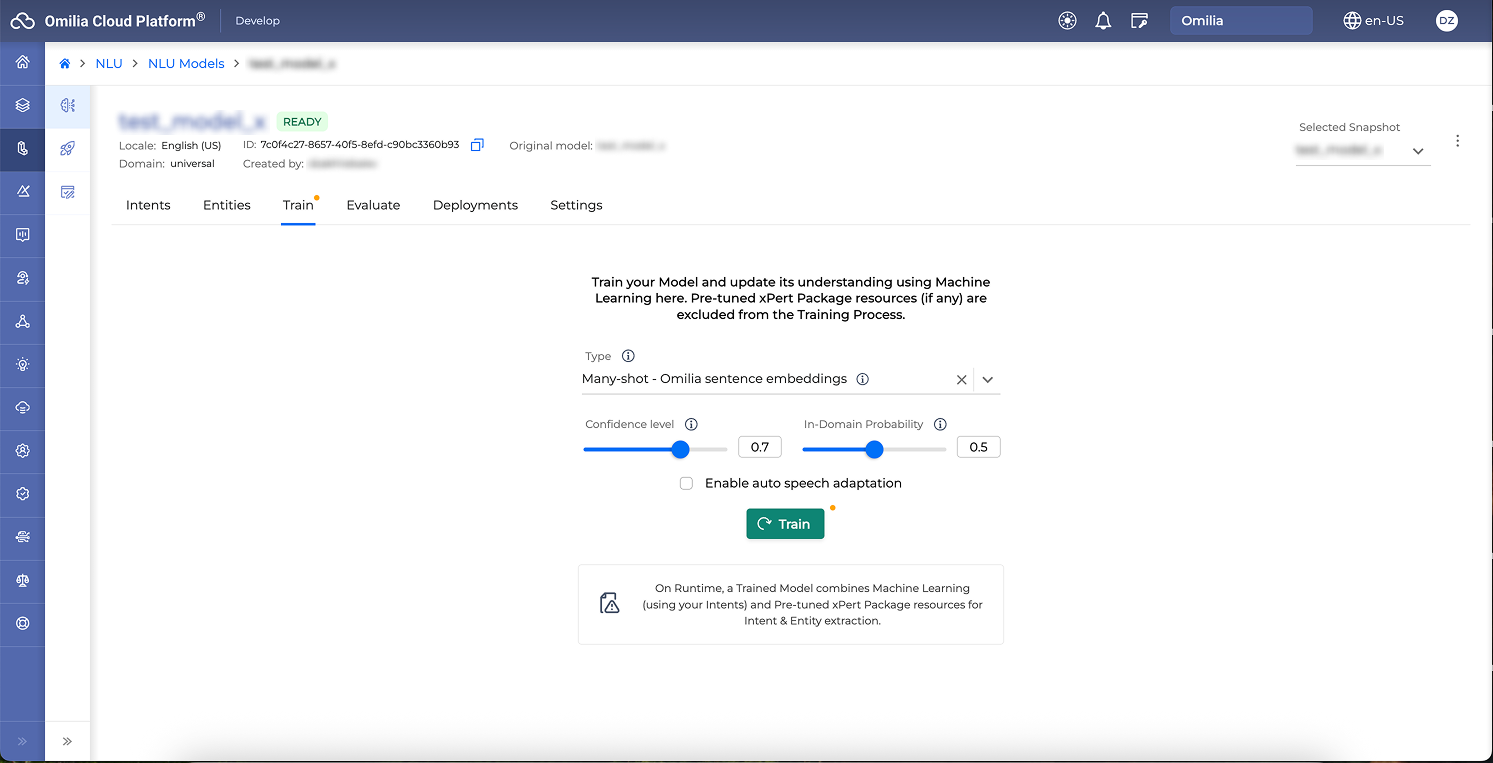

Open the Train tab.

If the Train page is greyed out, the model has already been trained.

- Select Type of the Machine learning encoders. You can train your model with different encoders and select the one that works best for you. Performance increases as the size of the training set increases. Depending on the selected language, the following types are available:

  - Many-shot - Omilia sentence embeddings: Available in English. Works best for Omilia domains (for example, Banking, Energy, and Telecommunications), leveraging transformer encoders with pre-trained domain-specific embeddings.
  - Many-shot - English sentence embeddings: Recommended for non-Omilia domains in English. Leverages transformer encoders with generic English embeddings.
  - Many-shot - Multilingual sentence embeddings: Suitable for all domains in languages other than English.

- Select Confidence level. The confidence level defines the accuracy level required to assign an intent to an utterance for the Machine Learning part of your model (if you’ve trained it with your own custom data). By default, the confidence level is 0.7. You can change this value to suit the quantity and quality of the data you’ve trained the model with.

  The more data you train your model with, the more accurate it will be, so a looser Confidence level (around 0.7 to 0.9) can be used. For models with a small volume of training data, a higher Confidence level must be used to avoid false predictions.

- Select In-Domain Probability. The in-domain probability threshold lets you decide how strict your model is with unseen data that is marginally in or out of the domain. Setting the threshold closer to 1 makes your model very strict with such utterances, at the risk of classifying an unseen in-domain utterance as out-of-domain. Conversely, moving it closer to 0 makes your model less strict, at the risk of classifying a real out-of-domain utterance as in-domain.

-

Optionally, you may enable ASR Adaptation.

-

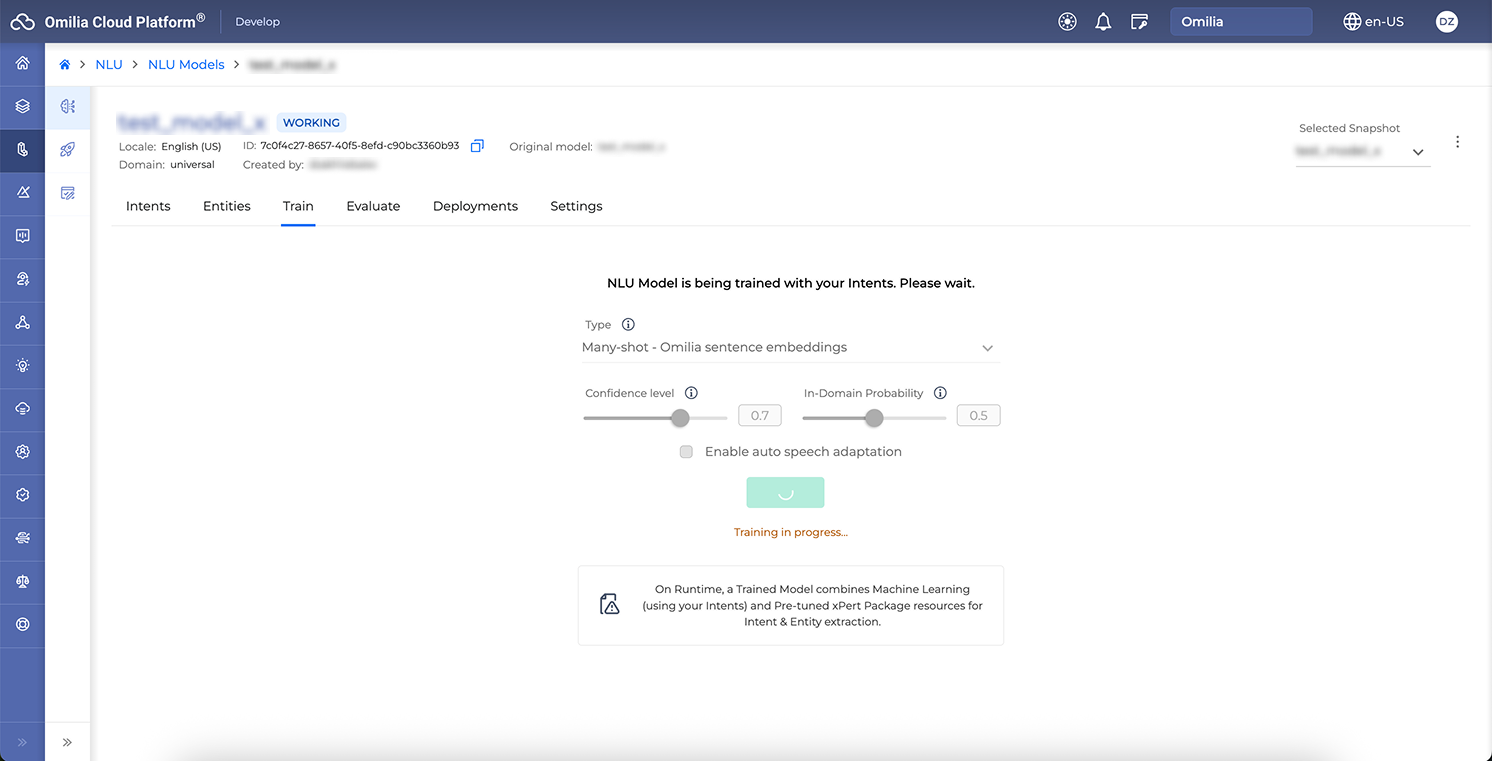

Click the Train button to start the training process. The model status changes to Working.

Depending on the training data scope, the training process can take up to several minutes.

-

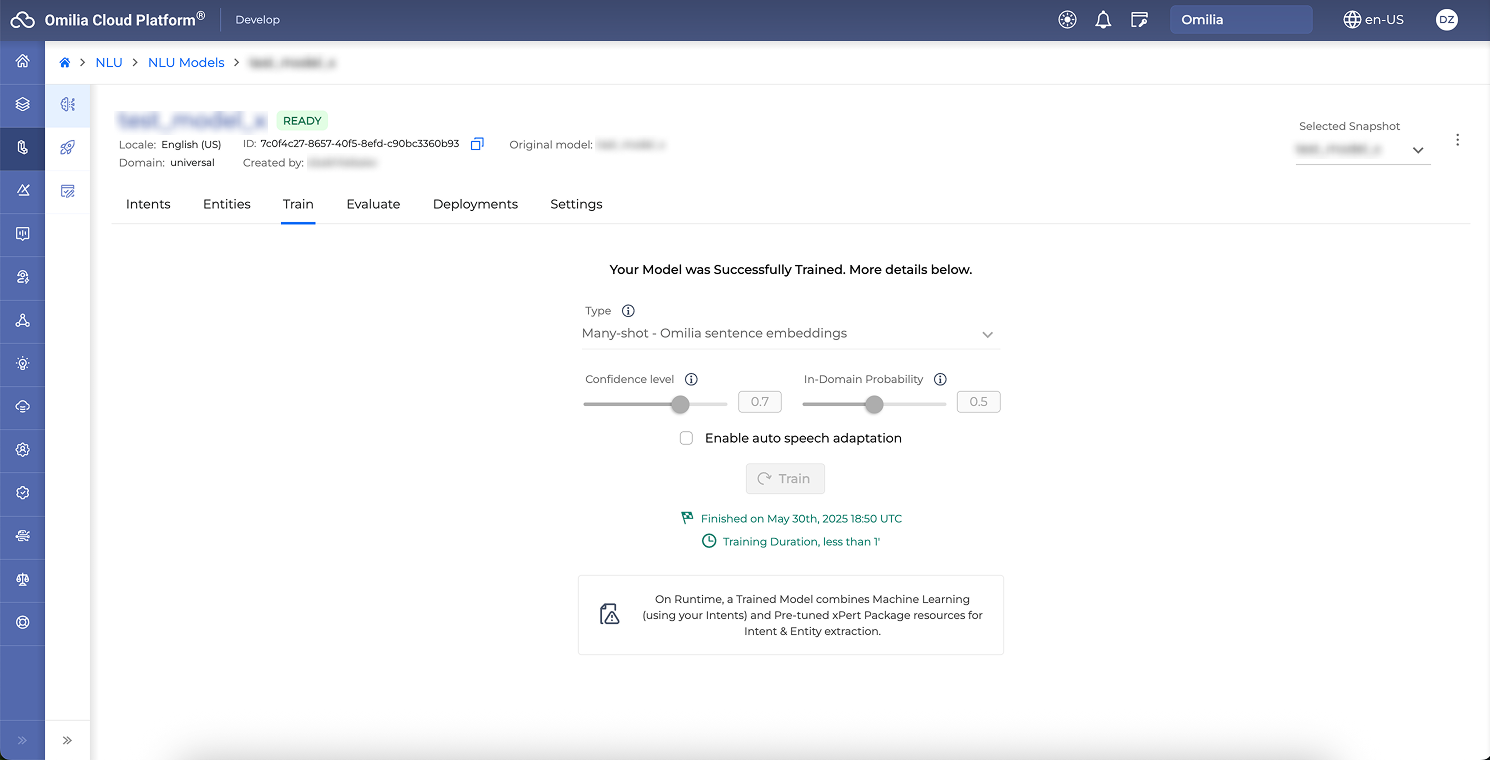

When the training is completed, the model status is set to Ready.

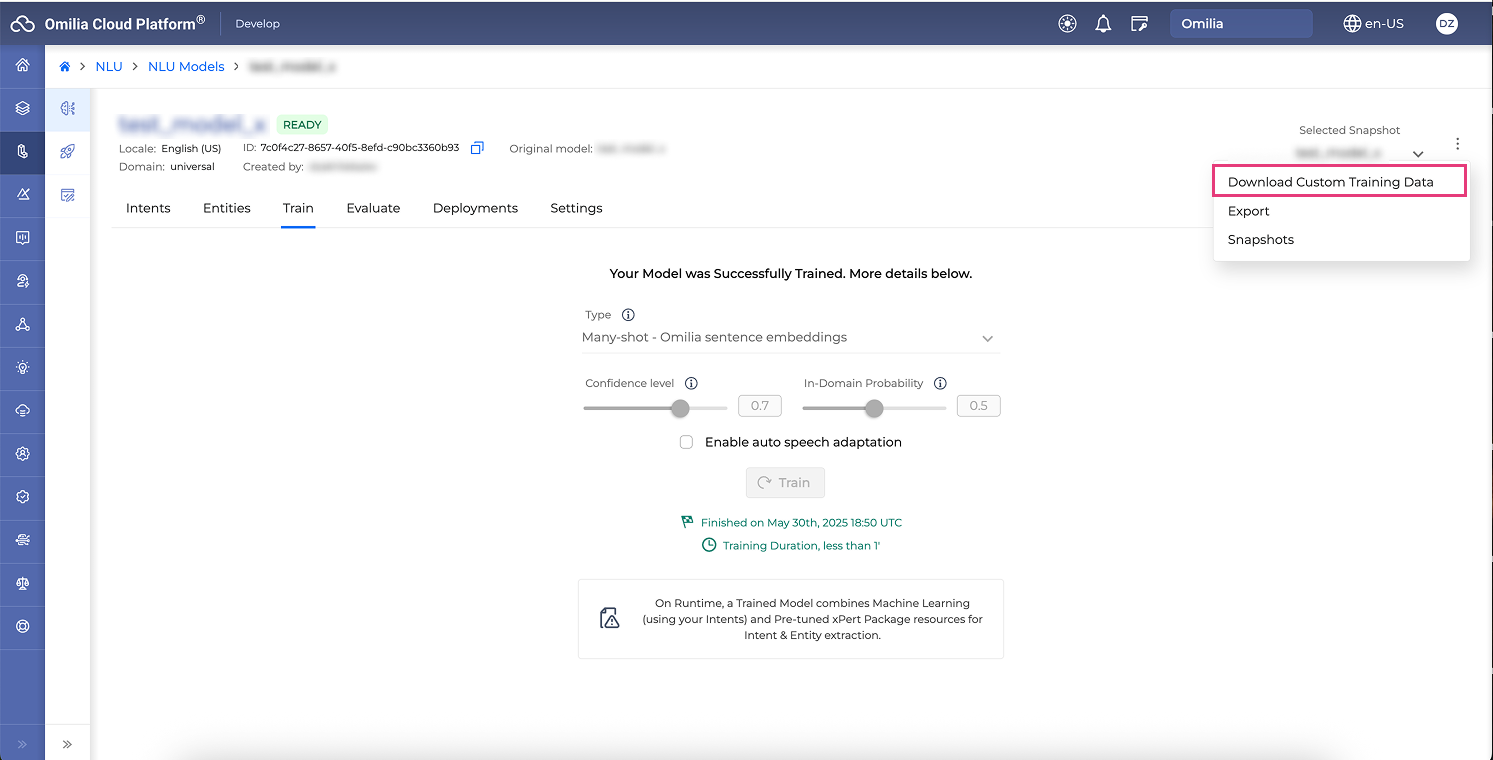
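The Confidence level and In-Domain Probability settings from the steps above can be pictured as two thresholds applied to a prediction. The following is a minimal sketch under stated assumptions: the function `resolve_intent`, the `scores` dictionary, the `"out-of-domain"` and `"no-match"` labels, and the order in which the two checks run are all hypothetical and do not describe the platform's internal logic. Only the default confidence level of 0.7 comes from the text.

```python
def resolve_intent(scores, in_domain_prob, in_domain_threshold, confidence_level=0.7):
    """Illustrative decision rule; not the platform's actual implementation.

    scores: dict mapping intent name -> model score in [0, 1] (hypothetical).
    in_domain_prob: estimated probability that the utterance is in-domain.
    in_domain_threshold: value between 0 and 1; closer to 1 is stricter.
    confidence_level: minimum top score needed to assign an intent.
    """
    # A stricter (higher) threshold rejects more borderline utterances.
    if in_domain_prob < in_domain_threshold:
        return "out-of-domain"
    intent, score = max(scores.items(), key=lambda kv: kv[1])
    # Assign the intent only when the top score reaches the confidence level.
    if score < confidence_level:
        return "no-match"
    return intent


scores = {"balance": 0.82, "payment": 0.11}  # hypothetical model output
print(resolve_intent(scores, 0.9, 0.6))                        # → balance
print(resolve_intent(scores, 0.9, 0.6, confidence_level=0.9))  # → no-match
print(resolve_intent(scores, 0.55, 0.6))                       # → out-of-domain
```

The same utterance yields three different outcomes depending only on the two settings, which is the trade-off the steps describe: stricter values reduce false predictions but reject more valid utterances.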

Downloading custom training data

To download your custom training data as a CSV file:

- Navigate to NLU → NLU Models section.
- Select a model from the list of available models and click on it to open.
- Click the Options menu icon next to the Selected Snapshot.
- Select Download Custom Training Data.
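Once the CSV file is downloaded, it can be inspected with a short script, for example to count utterances per intent. The following is a minimal sketch: the layout of the exported file is not described here, so the column names `utterance` and `intent` and the helper `utterances_per_intent` are hypothetical and should be adjusted to the headers in the actual export.

```python
import csv
import io
from collections import Counter


def utterances_per_intent(csv_file, intent_column="intent"):
    """Count rows per intent in an open CSV file.

    The default column name "intent" is hypothetical; replace it with the
    header used in the actual export.
    """
    reader = csv.DictReader(csv_file)
    return Counter(row[intent_column] for row in reader)


# Stand-in for a downloaded file; a real export may use different headers.
sample = io.StringIO(
    "utterance,intent\n"
    "what is my balance,balance\n"
    "show my balance,balance\n"
    "pay my bill,payment\n"
)
print(utterances_per_intent(sample))
```

For a real file, pass the result of `open(path, newline="", encoding="utf-8")` instead of the in-memory sample.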