Data Compliance

Omilia OCP® Data Warehouse and Exports API comply with General Data Protection Regulation (GDPR) requirements. For any data type exported from OCP through Exports API, OCP users are responsible for ensuring that the exported data is handled accordingly and meets the necessary regulations.

Overview

Export Service is a dedicated service that allows OCP users to gain access to their raw application data. The Export API provides two distinct functionalities: Batch Export and Streaming Export.

For availability and billing details of Audios functionality (Batch and Streaming), please contact your Account Manager, as additional charges may apply.

Read more about the Export Service features in the Export Service User Guide.

Export API is crucial for OCP users who want to retrieve, export, and manage their raw application data within the specified constraints and authentication mechanisms. Here is a brief overview of what Export API could assist with:

- Exporting Call Detail Records (CDRs)
- Exporting Voice Biometrics data
- Exporting Whole Call Recordings (WCRs)
- Exporting Audit data

Supported data types and their respective file types can be found in Export Service User Guide | Supported Data.

For Batch Export, users can request multiple data types to be included in a single export.

Streaming Export follows a streaming subscription per type per OCP group model.

Audit events may currently be available in specific environments. Please contact your Account Manager for more details.

Export Data use cases

Here are the key reasons for exporting data:

- Retention Policy: Omilia enforces a 60-day retention policy for compliance purposes; to prevent data loss, it is imperative to export and store the data in your individual database.
- Flexible Data Transfer: Seamlessly transfer data to a library of your choice, ensuring immediate and unrestricted access for your specific requirements.
- Custom Metric Development: Empower your analytics by creating personalized metrics tailored to your unique business needs, leveraging the exported data for comprehensive insights and reporting capabilities beyond the scope of the reports that OCP already offers.

General Info

Exports API is organized around HTTP REST. The information below applies to the usage of the Exports API in general.

Authentication

See API Authentication to learn more about the Authorization Token Endpoint and the authentication process.

Error Codes

Omilia's HTTP response codes can be found on the HTTP Code Responses page.

For HTTP response codes and examples specific to Exports API, check the API Reference chapter.

Rate Limiting

Exports API has a rate limiting mechanism that applies a threshold of requests per group within a specific time window, for all endpoints.

If the limit is exceeded, the API responds with Status Code 429 (Too Many Requests).

For Batch Export, a maximum of 5 ZIP files can be downloaded within a minute. Any further download in that window will hit rate limiting.
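As an illustration of how a client might stay under the download limit described above, here is a minimal client-side sketch. It is not part of the Exports API: `SlidingWindowLimiter` and `backoff_delay` are hypothetical helper names, and the exponential backoff after a 429 response is an assumption, since the section does not say how long a client should wait.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_calls` calls within any `window` seconds."""

    def __init__(self, max_calls=5, window=60.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.window = window
        self._clock = clock      # injectable so the limiter can be tested
        self._sleep = sleep
        self._calls = deque()    # timestamps of the most recent calls

    def wait_time(self):
        """Seconds to wait before the next call is allowed (0.0 if none)."""
        now = self._clock()
        # Forget calls that have already left the window.
        while self._calls and now - self._calls[0] >= self.window:
            self._calls.popleft()
        if len(self._calls) < self.max_calls:
            return 0.0
        return self.window - (now - self._calls[0])

    def acquire(self):
        """Block until a call is allowed, then record it."""
        while True:
            delay = self.wait_time()
            if delay <= 0:
                break
            self._sleep(delay)
        self._calls.append(self._clock())


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Assumed exponential backoff (seconds) after a 429 response."""
    return min(cap, base * (2 ** attempt))
```

A client would call `limiter.acquire()` before each ZIP download, and sleep for `backoff_delay(attempt)` before retrying if a 429 is still returned.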

Batch Export

The batch export functionality is targeted towards users who need to retrieve their raw application data on demand.

Functionalities

- Request historical data for specific date ranges
- Track export status:
  - SUBMITTED → The export request was submitted successfully.
  - PROCESSING → Export API started processing the request.
  - READY → The batch export is available to be downloaded.
  - EXPIRED → The batch export is no longer available to be downloaded.
- Get export metadata (file count, size) once a batch export is ready (status=READY)
  - To consume the data programmatically, a user can use this metadata to create a proper iteration plan.
- Download data as compressed ZIP files.
- Export multiple data types in a single request.

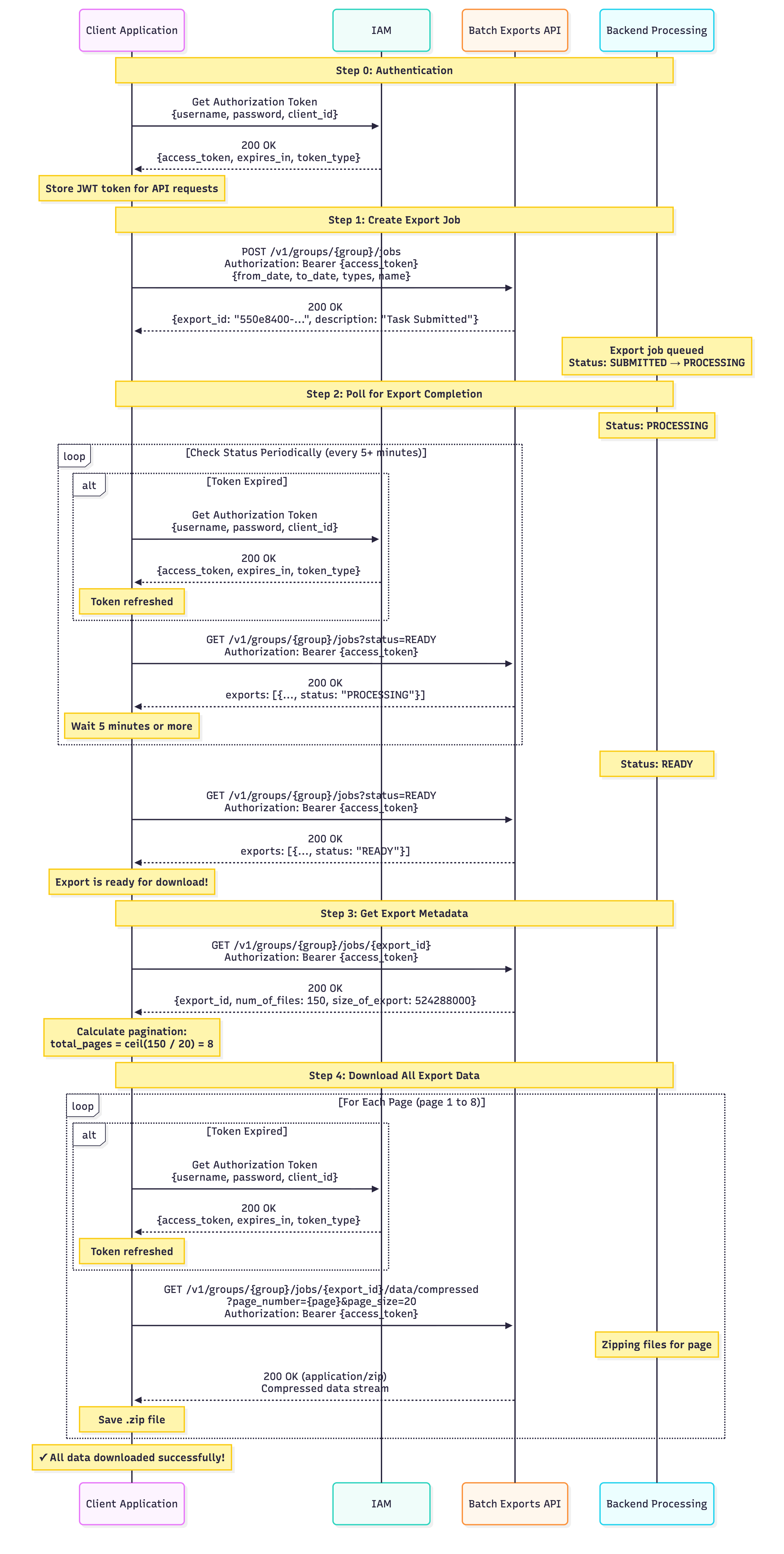

Iteration plan

Once the batch export is ready for download, an iteration plan must be configured.

Assume the following response when checking the export metadata:

{
  "export_id": "550e8400-e29b-41d4-a716-446655440000",
  "num_of_files": 500,
  "size_of_export": 524288000
}

The client has decided to use page_size=50.

In this setup, the client needs to send 10 requests with the following configuration in the request headers:

- page_number=1 &page_size=50
- page_number=2 &page_size=50
- …
- page_number=10 &page_size=50

The final result would be 10 .zip files containing 50 files each.

We conclude that: ceil(num_of_files/page_size) = number of iterations required.

Changing the page_size while iterating will lead to inconsistent or duplicate results.
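The calculation above can be sketched in a few lines of Python. The function name `iteration_plan` is hypothetical, and the sketch only builds the list of page parameters; how they are attached to each download request is left to the API Reference.

```python
def iteration_plan(num_of_files, page_size):
    """Return one {page_number, page_size} entry per required request."""
    if page_size <= 0:
        raise ValueError("page_size must be positive")
    # Integer form of ceil(num_of_files / page_size).
    pages = (num_of_files + page_size - 1) // page_size
    # page_size stays fixed for the whole plan, as required above.
    return [{"page_number": n, "page_size": page_size}
            for n in range(1, pages + 1)]


# Metadata taken from the example response above.
metadata = {
    "export_id": "550e8400-e29b-41d4-a716-446655440000",
    "num_of_files": 500,
    "size_of_export": 524288000,
}
plan = iteration_plan(metadata["num_of_files"], page_size=50)
print(len(plan))  # 10
```

Building the whole plan up front, with a single page_size, makes it harder to change the page size by accident in the middle of an iteration.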

Sequence diagram

Below you can find a sequence diagram that showcases step by step how to consume data using Batch Export programmatically.

Constraints

- Maximum date range: 2 days per export (minutes/seconds ignored)
- Minimum date range: 1 hour (minutes/seconds ignored)
- Historical limit: Up to 2 months in the past
- Data freshness: Data requested must be at least 2 hours old
- Concurrent exports: Only 1 running (status = RUNNING) export per group can be active
- Data retention: 24 hours after completion, then auto-deleted (status = EXPIRED)

Minutes, seconds, and milliseconds do not change the export. For example, 2023-01-12T12:00:00.000Z to 2023-01-12T13:00:00.000Z is equal to 2023-01-12T12:10:00.000Z to 2023-01-12T13:20:00.000Z.
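A client may want to check a requested range against these constraints before submitting it. The following is a rough sketch, not the service's own validation logic: `check_range` is a hypothetical helper, "2 months" is approximated as 60 days, and it is an assumption that the freshness rule applies to the end of the range.

```python
from datetime import datetime, timedelta, timezone

MAX_RANGE = timedelta(days=2)
MIN_RANGE = timedelta(hours=1)
HISTORY_LIMIT = timedelta(days=60)  # assumption: "2 months" taken as 60 days
FRESHNESS = timedelta(hours=2)


def truncate_to_hour(ts):
    """Drop minutes, seconds and microseconds, which the export ignores."""
    return ts.replace(minute=0, second=0, microsecond=0)


def check_range(start, end, now):
    """Return (start, end, problems) for the hour-truncated range."""
    start, end = truncate_to_hour(start), truncate_to_hour(end)
    problems = []
    span = end - start
    if span < MIN_RANGE:
        problems.append("range shorter than 1 hour")
    if span > MAX_RANGE:
        problems.append("range longer than 2 days")
    if start < now - HISTORY_LIMIT:
        problems.append("start older than the historical limit")
    # Assumption: freshness is measured against the end of the range.
    if end > now - FRESHNESS:
        problems.append("data is less than 2 hours old")
    return start, end, problems


utc = timezone.utc
now = datetime(2023, 1, 12, 18, 0, tzinfo=utc)
a = check_range(datetime(2023, 1, 12, 12, 0, tzinfo=utc),
                datetime(2023, 1, 12, 13, 0, tzinfo=utc), now)
b = check_range(datetime(2023, 1, 12, 12, 10, tzinfo=utc),
                datetime(2023, 1, 12, 13, 20, tzinfo=utc), now)
print(a == b)  # True: both ranges truncate to 12:00-13:00
```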

Output

Directories

Once the export is unzipped, a user will find a directory structure that adheres to the following layout:

Exports

-> message type (for example, message_type=vb_enrolment)

-> date = date and hour of the exported data (for example, date=2024-07-18-12)

-> group = OCP Group name (for example, group=myGroup)

-> the name of the file (for example, vb_enrolment+1+0001276400.json)

Full path example:

~/exports/message_type=vb_enrolment/date=2024-07-18-12/group=vb-qa-dev/vb_enrolment+1+0001276400.json

Please avoid hard-coding paths in your scripts.

You may notice an additional underscore directory “/_/” between exports and message_type in your outputs, for example, exports/_/message_type.
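One way to avoid depending on the exact directory depth is to read the key=value components from wherever they appear in the path. The sketch below assumes only the layout described above; `parse_export_path` is a hypothetical helper name.

```python
from pathlib import PurePosixPath

KNOWN_KEYS = ("message_type", "date", "group")


def parse_export_path(path):
    """Extract message_type, date, group and file name from an export path."""
    parts = PurePosixPath(path).parts
    info = {"file_name": parts[-1]}
    for part in parts[:-1]:
        key, sep, value = part.partition("=")
        # Components without "=" (such as "exports" or "_") are skipped.
        if sep and key in KNOWN_KEYS:
            info[key] = value
    return info


plain = parse_export_path(
    "~/exports/message_type=vb_enrolment/date=2024-07-18-12/"
    "group=vb-qa-dev/vb_enrolment+1+0001276400.json"
)
with_underscore = parse_export_path(
    "exports/_/message_type=vb_enrolment/date=2024-07-18-12/"
    "group=vb-qa-dev/vb_enrolment+1+0001276400.json"
)
print(plain == with_underscore)  # True
```

Because the extra “/_/” directory carries no key=value pair, it is ignored and both layouts yield the same result.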

Format

For exports with JSON filetype (non-WCR), the files extracted from the ZIP files are in the JSON Lines format. This is a format for storing semi-structured data that may be processed one record at a time. It is essentially a sequence of JSON objects, each on its own line, which makes it well-suited for streaming and processing large datasets.

JSON Lines is a plain-text format. More information about it can be found in the JSON Lines documentation.

For exports that contain WCR (Whole Call Recordings), the ZIP will contain .wav files.

Example

// Example of JSON Lines format

{"asr":{"mrcp_session_id":"","rec_uri":"","vad_uri":"","mrs_uri":"","bio_uri":""},"rejections":0,"end_type":"FAR_HUP","channel":"miniapps","device_type":"undefined","contact_id":"","locale":"en-US","destination_uri":"","no_inputs":0,"duration":9780,"steps_num":3,"exit_point":"","test_flag":false,"id":"","agent_request":0,"kvps":[{"step":"1","value":"undefined","key":"callId"}],"no_matches":1,"events":"","flow":{"parent_name":"","parent_step":0,"version_tag":"","type":"Banking","uuid":"","path":[],"account_id":"","diamant_app_name":"MiniApps","parent_id":"","organization":"","name":"","id":"","root_step":0},"group":"","direction":"","exported":false,"app":{"name":"","version":2009485},"origin_uri":"8","session_id":"","message_type":"dialog_end","exit_attached_data":{},"diamant":{"id":"","region":"","version":"9.11.0"},"connection_id":"","hashed_origin_uri":"","time":1763012220333,"metrics":[],"same_states":0,"synopsis_uri":""}

{"asr":{"mrcp_session_id":"","rec_uri":"","vad_uri":"","mrs_uri":"","bio_uri":""},"rejections":0,"end_type":"FAR_HUP","channel":"miniapps","device_type":"undefined","contact_id":"","locale":"en-US","destination_uri":"","no_inputs":0,"duration":1817,"steps_num":2,"exit_point":"","test_flag":false,"id":"","agent_request":0,"kvps":[{"step":"1","value":"undefined","key":"callId"}],"no_matches":0,"events":"","flow":{"parent_name":"","parent_step":0,"version_tag":"","type":"Banking","uuid":"","path":[],"account_id":"","diamant_app_name":"MiniApps","parent_id":"","organization":"","name":"","id":"","root_step":0},"group":"","direction":"","exported":false,"app":{"name":"","version":2009485},"origin_uri":"b","session_id":"","message_type":"dialog_end","exit_attached_data":{},"diamant":{"id":"","region":"","version":"9.11.0"},"connection_id":"","hashed_origin_uri":"","time":1763012220572,"metrics":[],"same_states":0,"synopsis_uri":""}

The above is a simple example of the format to expect from the export. For the specific fields to expect, please visit Call Data Records (CDRs) | OCP® Learning Center.
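Because each line is an independent JSON object, an extracted file can be processed one record at a time with the standard library alone. The sketch below uses two heavily shortened records based on the example above; `read_json_lines` is a hypothetical helper name.

```python
import io
import json


def read_json_lines(stream):
    """Yield one parsed record per non-empty line of a JSON Lines stream."""
    for line in stream:
        line = line.strip()
        if not line:
            continue  # tolerate blank lines between records
        yield json.loads(line)


# Shortened stand-in for an extracted file; real records have many more fields.
sample = io.StringIO(
    '{"message_type":"dialog_end","duration":9780,"steps_num":3}\n'
    '\n'
    '{"message_type":"dialog_end","duration":1817,"steps_num":2}\n'
)
records = list(read_json_lines(sample))
total_duration = sum(r["duration"] for r in records)
print(len(records), total_duration)  # 2 11597
```

For a real file, the same generator can be passed an open file handle, for example `open(path, encoding="utf-8")`, so the whole file never has to be held in memory.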

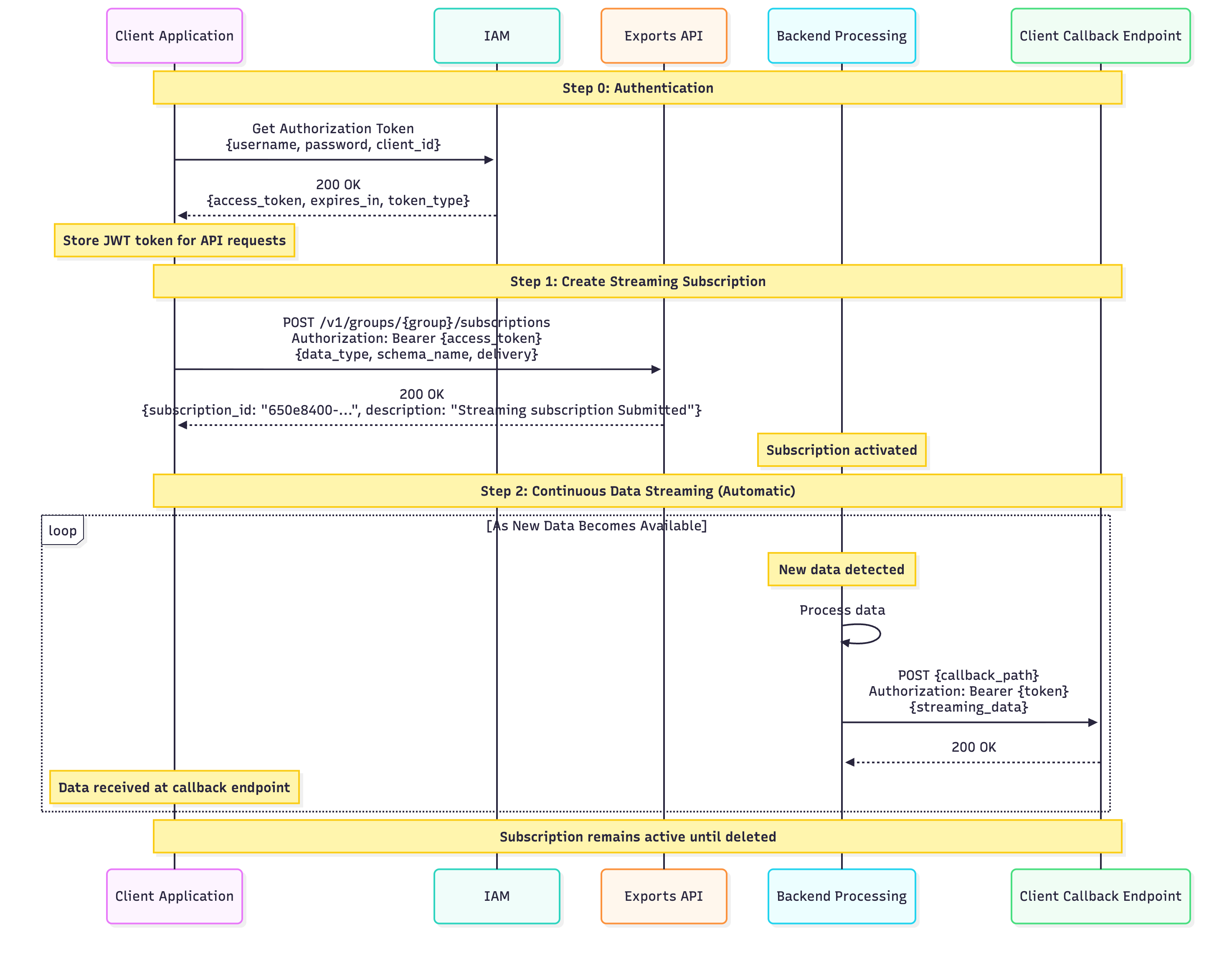

Streaming Export (Subscriptions)

Subscription functionality within the Export Service of Omilia OCP® empowers users to subscribe to message channels, facilitating the receipt of near real-time data streams. Read more about how to use subscriptions in the Export Service User Guide.

Streaming Exports API is organized around HTTP REST for the management of the subscriptions.

To get access to Export Subscriptions, please contact your Account Manager.

Although it aims to support multiple delivery methods, currently the streams are delivered via HTTP requests sent to the client’s public API gateway.

Each group is allowed to have only one running streaming export at a time for each message type (for example, dialog_step, dialog_start). After the subscription is created successfully, the data starts streaming to the callback path provided by the client.

We use built-in HTTP authentication to validate users based on groups/roles stored in IAM.

Requirements

To consume the near real-time raw data, a user must have set up:

- An OAuth2 endpoint for authentication → Used by the Exports API to obtain a JWT token that is then sent in the subsequent requests that push data.
- A POST endpoint for receiving data → Must be publicly accessible and is used by the Exports API to push the data.

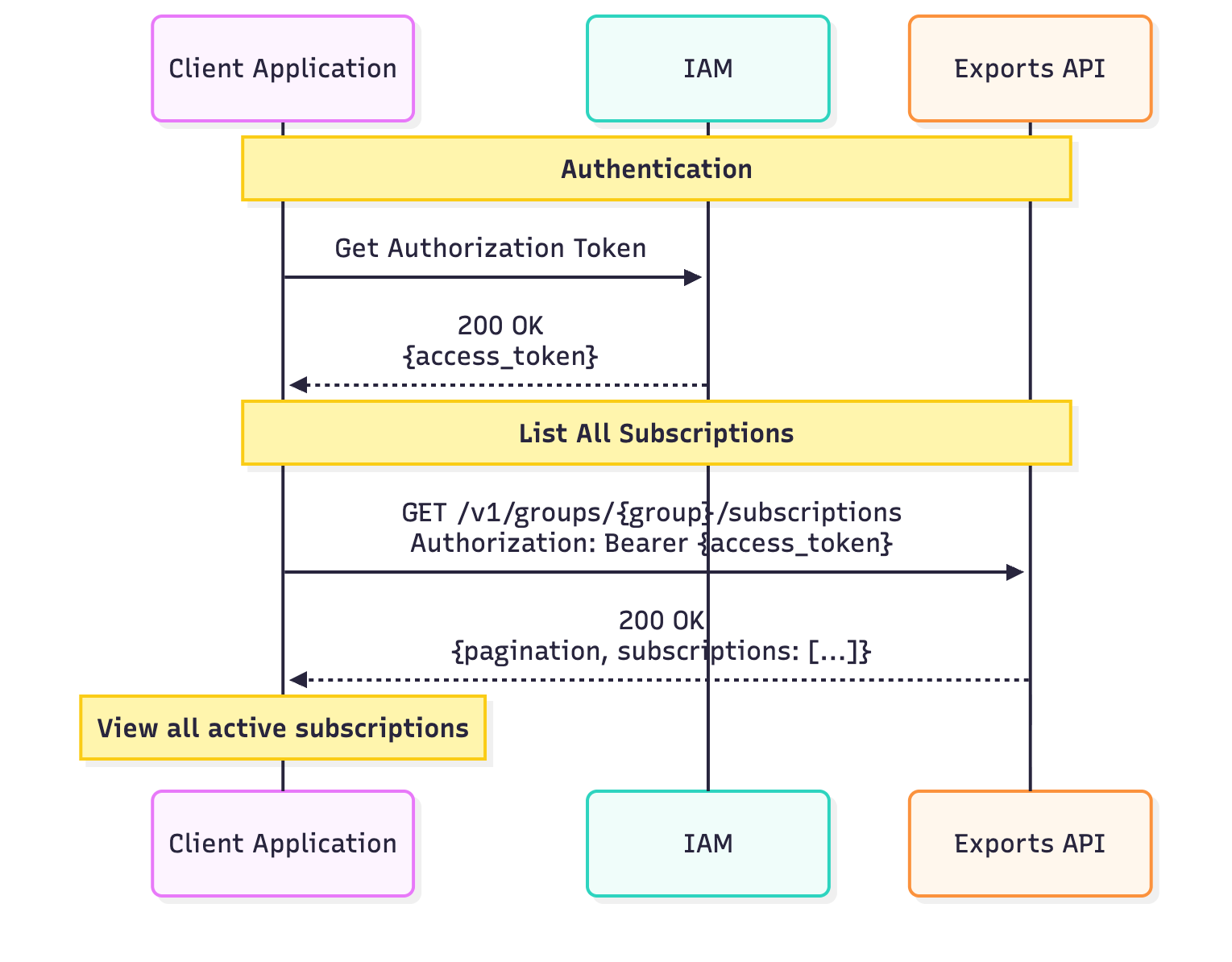

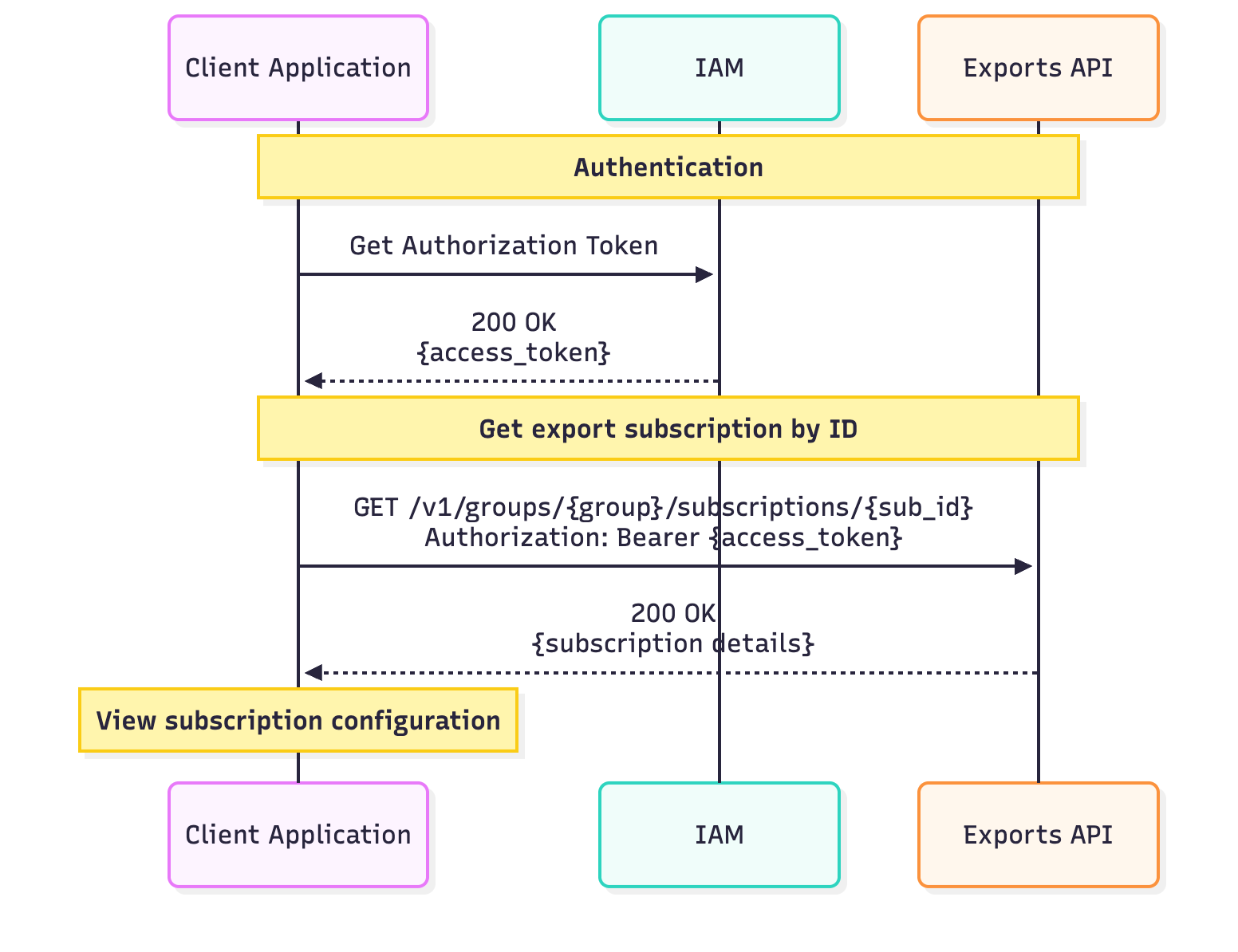

Functionalities

- Create subscriptions for specific data types
- Check the current configuration of an active subscription
- Update a subscription’s configuration
- Delete an active subscription
- Real-time continuous data delivery to customer HTTP endpoint
- Automatic retries upon client’s API errors (Reconciliation Process)

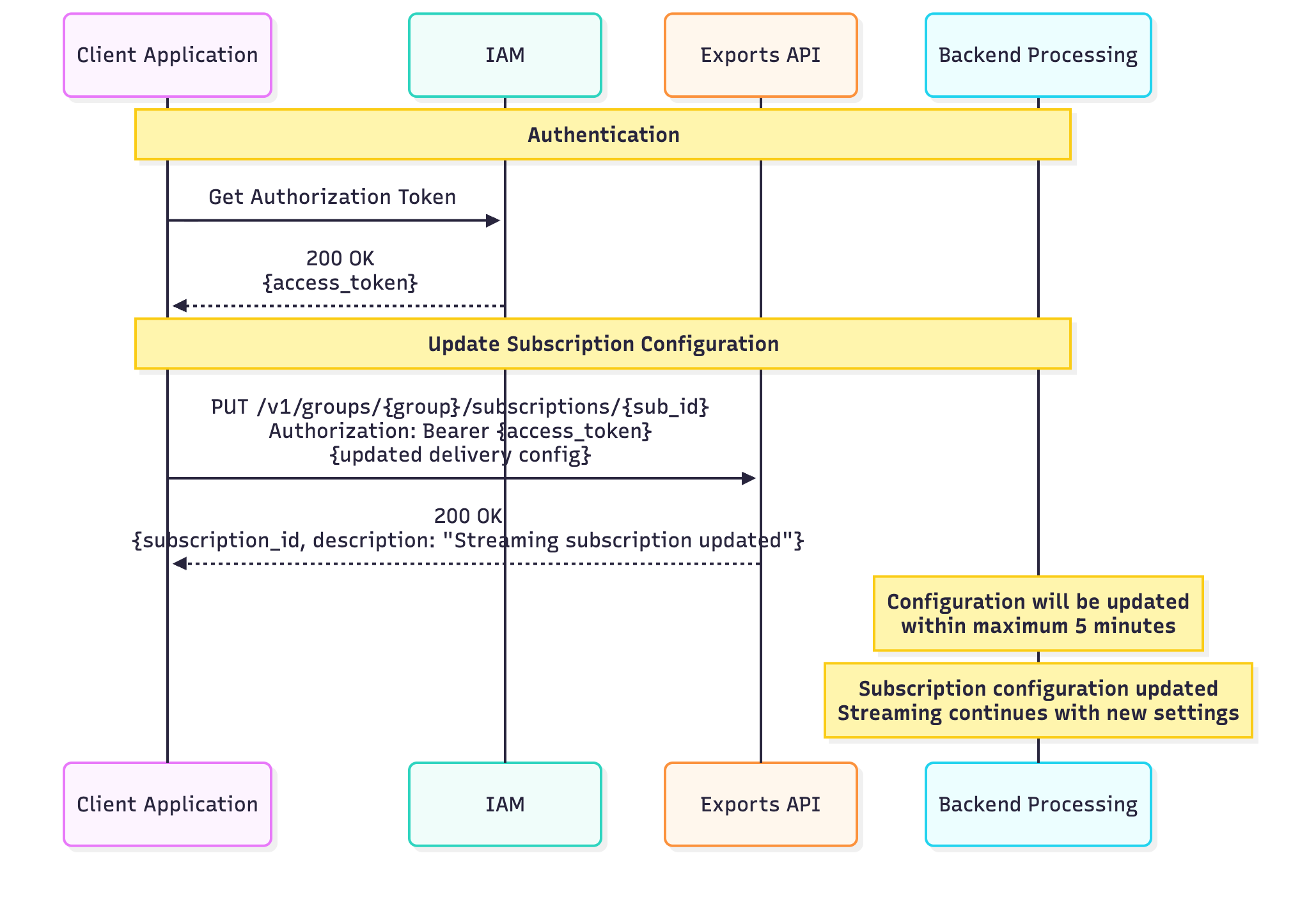

Updating a streaming subscription’s configuration works with eventual consistency.

From the time a user updates the configuration, it might require approximately 5 minutes for the Export API to synchronize and start using the new configuration.

During that time the customer’s server is REQUIRED to support BOTH the previous and the updated configurations to avoid data loss.

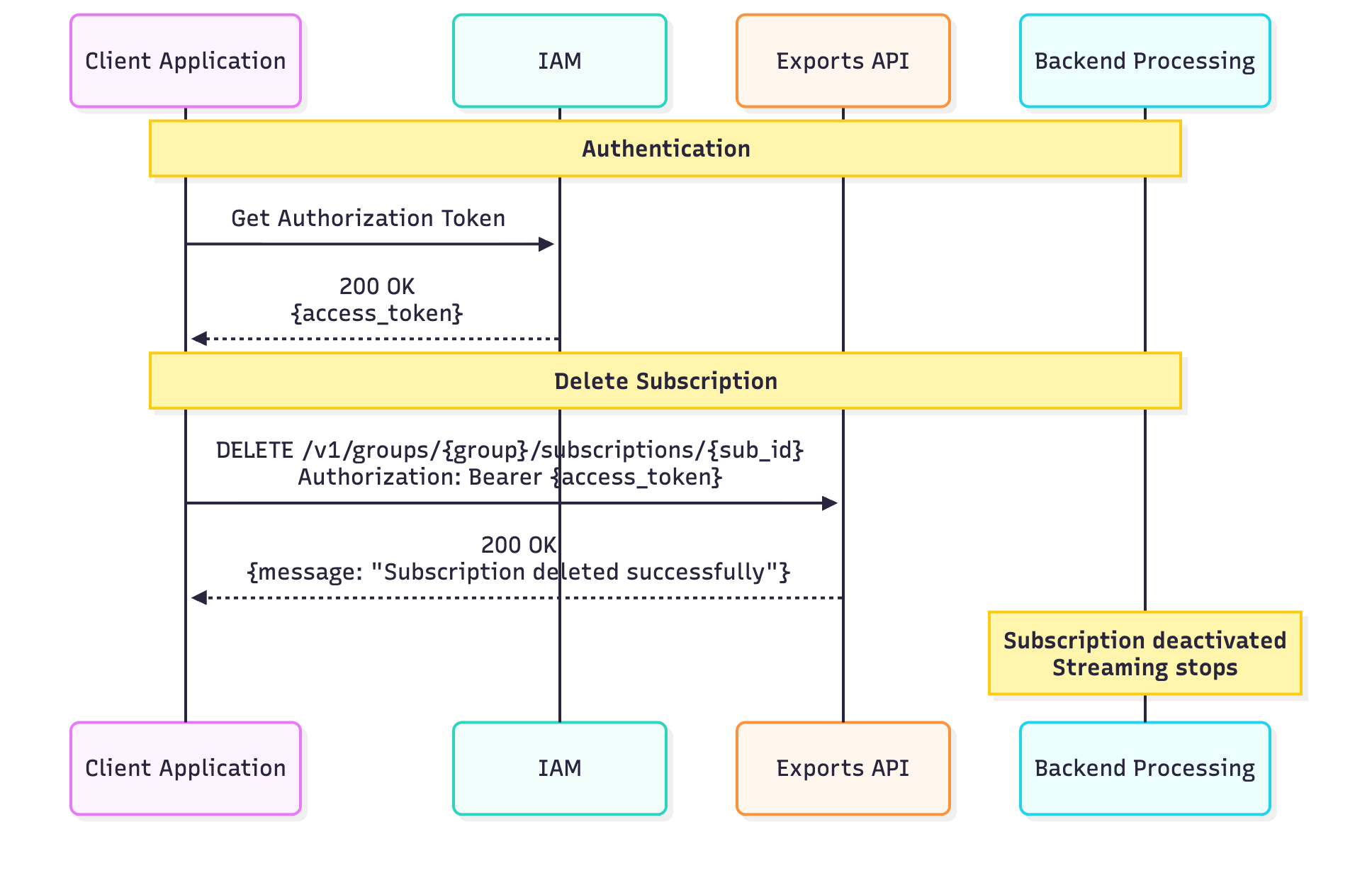

Verify configuration

Before deleting old configurations, ensure they are no longer in use to prevent service interruptions.

- API URLs: Verify that the old URL is not receiving any requests.
- OAuth2 Credentials: Confirm that the old user/credentials are no longer authenticating active requests.

Sequence diagrams

Below you can find sequence diagrams that showcase the operations you can perform to manage your streaming subscriptions.

Reconciliation Process

Exports API offers a reconciliation process for messages that were dropped due to unavailability of the customer's API or other kinds of failure.

The reconciliation process can fetch up to 24 hours of data, meaning it can run even if the client’s API is down for up to 24 hours. The reconciliation mechanism is triggered automatically, within approximately 10 minutes after the client’s API is back online.

The reconciliation process runs in the background to fetch older data, while the stream continues to send newly generated data. Newly generated data is handled with priority over older data, but the streams of old and new data run in parallel. The reconciliation time may vary depending on both the current stream load and the amount of older data that needs to be processed, bounded by the rate limiting requirements of the client’s API.

For cases when the client's API was down more than 24 hours, we recommend using the Batch Export to fetch older data.

Constraints

- One subscription per data type per group: Cannot have multiple subscriptions for same data type
- Delivery guarantee: At-least-once (customer must handle duplicates)
- Valid response: Exports API expects a valid JSON Object response from the client API
- API gateway capability: The API must be capable of handling the total aggregate data volume, calculated as the data generated per call multiplied by the expected number of concurrent calls
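Since delivery is at-least-once, the receiving side needs some way to recognise a message it has already processed. The section does not name a unique message identifier, so the sketch below fingerprints the whole message content instead; this is an assumption, and `Deduplicator` is a hypothetical helper. The in-memory set is for illustration only, and a real deployment would more likely use a persistent store with an expiry.

```python
import hashlib
import json


def message_fingerprint(message):
    """Stable SHA-256 hash of a message, independent of key order."""
    canonical = json.dumps(message, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


class Deduplicator:
    """Remember fingerprints of messages that were already processed."""

    def __init__(self):
        self._seen = set()

    def is_new(self, message):
        """Return True the first time a message is seen, False afterwards."""
        fingerprint = message_fingerprint(message)
        if fingerprint in self._seen:
            return False
        self._seen.add(fingerprint)
        return True


dedup = Deduplicator()
first = dedup.is_new({"message_type": "dialog_start", "time": 1763012220333})
# Same content redelivered, with keys in a different order.
again = dedup.is_new({"time": 1763012220333, "message_type": "dialog_start"})
print(first, again)  # True False
```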

Output

Here are some examples of the data that the client will start receiving after successfully creating a streaming exports subscription.

JSON example

Here is an example request that Exports API sends to the client’s API while streaming JSON data.

"request": {
  "method": "POST",
  "headers": {
    "User-Agent": "ReactorNetty/1.2.11",
    "Content-Type": "application/json",
    "Accept": "application/json;v=1",
    "Client-Corelation-Id": "436c095c-9bf0-4177-a5d5-bf9cea22effc",
    "Authorization": "Bearer <JWT>",
    "Content-Length": "5840"
  },
  "body": ""
}

In the body, the events are delivered in JSON format, as the following snippets show.

The "messages" array might include multiple JSON CDRs.

Each event carries its corresponding subscription type in the message_type field inside messages.

In the following example, the message type is dialog_start (the start of a CDR), while "my_ocp_start_cdrs" is the name selected for the export subscription.

{
  "schemaName": "my_ocp_start_cdrs",
  "sessionId": "5c2b9dc1-899a-4124-97f5-b7e3e02a0660",
  "sessionStartingSequenceNumber": "0",
  "messages": [
    {
      "app":
      ...
      "message_type": "dialog_start",
      ...
    },
    {
      ...
    },...
  ]
}

In the following example containing VB data, the message type is vb_verification, while "my_ocp_vb_verification" is the name selected for the export subscription.

{
  "schemaName": "my_ocp_vb_verification",
  "sessionId": "5c2b9dc1-899a-4124-97f5-b7e3e02a0660",
  "sessionStartingSequenceNumber": "0",
  "messages": [
    {
      "app":
      ...
      "message_type": "vb_verification",
      ...
    },
    {
      ...
    },...
  ]
}
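On the receiving side, a handler for these JSON bodies might look roughly like the sketch below. It assumes only the envelope fields shown in the examples above (schemaName and messages, with message_type inside each message). The function name `handle_stream_body` and the shape of the returned acknowledgement are hypothetical; the only requirement taken from this page is that the client API responds with a valid JSON object.

```python
import json
from collections import Counter


def handle_stream_body(raw_body):
    """Parse one streamed request body and build a JSON-object response."""
    envelope = json.loads(raw_body)
    messages = envelope.get("messages", [])
    # Count messages per type so they can be routed or monitored.
    counts = Counter(m.get("message_type", "unknown") for m in messages)
    # Hypothetical acknowledgement: any valid JSON object would do here.
    return {
        "schemaName": envelope.get("schemaName"),
        "received": len(messages),
        "by_type": dict(counts),
    }


# Shortened body; real messages carry the full CDR fields.
body = json.dumps({
    "schemaName": "my_ocp_start_cdrs",
    "sessionId": "5c2b9dc1-899a-4124-97f5-b7e3e02a0660",
    "sessionStartingSequenceNumber": "0",
    "messages": [
        {"message_type": "dialog_start"},
        {"message_type": "dialog_start"},
    ],
})
ack = handle_stream_body(body)
print(json.dumps(ack))
```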

WCR (.wav) example

Here is an example request that Exports API sends to the client’s API while streaming whole call recordings.

"request": {
  "method": "POST",
  "headers": {
    "User-Agent": "ReactorNetty/1.2.11",
    "Accept": "application/json;v=1",
    "Client-Corelation-Id": "379950fb-9141-4f3e-a3ad-2e212971e42b",
    "Authorization": "Bearer <JWT>",
    "X-OML-ORN": "storage:dw_qa:5684ea55-332c-4156-a36a-6f5d5fdc406d:wcr:s3:us-east-1:2025/11/27/12/e0a121ef1db64e398c1b9b2935fc4cdd45dc9ff6.1764246885953.e1597a55aff9452cbdb4ba570b115562.wav",
    "Content-Type": "application/octet-stream",
    "Transfer-Encoding": "chunked"
  }
}

The last part of the X-OML-ORN header is the dialogID of the root flow.

The Content-Type for WCR requests is application/octet-stream.

The request body in this case is the binary content of the audio file (.wav).

Prerequisites

Before you can use the Exports API, please ensure the following prerequisites are met:

- An active OCP account with the appropriate group access (OWNER group permissions)
- A Group name
- baseUrl [see Exports API | API Reference]
  - For example, https://us1-a.ocp.ai
- Authorization (either one of):
  - Username / password [see API Authentication]
  - Personal Access Token (PAT) [see Personal Access Token (PAT) Authentication]
    - The Manage Tokens policy is required to generate a PAT
- Postman, web version or an installed application (optional, required just for the examples)

API Reference

Endpoints from the organization-controller are only available for the VFO environment. Different collection endpoints are tied to specific environments, which may be accessible to different customers. For more information, please contact your Account Manager.